With the latest release of Ubuntu, I thought it would be the perfect time to talk about server upgrades. Specifically, I’m going to share with you the process that I’m using to perform upgrades.

I don’t shy away from work, but I hate doing work that really isn’t needed. That’s why my first question when it comes to upgrades is:

Is this upgrade even necessary?

The first thing to know is the EOL (end-of-life) date for the OS release you’re running. Here are the end-of-standard-support dates for Ubuntu LTS releases. Canonical offers Extended Security Maintenance beyond these dates through Ubuntu Pro.

- Ubuntu 14.04 LTS: April 2019

- Ubuntu 16.04 LTS: April 2021

- Ubuntu 18.04 LTS: April 2023

- Ubuntu 20.04 LTS: April 2025

- Ubuntu 22.04 LTS: April 2027

- Ubuntu 24.04 LTS: April 2029

By the way, Red Hat Enterprise Linux provides at least 10 years of support for each major release. That long support window is one reason you’ll find RHEL in large organizations.

So, if you’re thinking of upgrading from Ubuntu 22.04 to 24.04, consider whether the service that server provides is even needed beyond April 2027. If the server is going away in the next couple of years, upgrading it probably isn’t worth your time.
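If you want to check this from the server itself, here’s a minimal shell sketch. The ubuntu_eol function is hypothetical and simply hard-codes the dates listed above. /etc/os-release is a standard file on Ubuntu that sets VERSION_ID (for example, 22.04).

```shell
# Hypothetical helper: print the end-of-standard-support date for an
# Ubuntu LTS release, using the dates listed in this post.
ubuntu_eol() {
  case "$1" in
    14.04) echo "April 2019" ;;
    16.04) echo "April 2021" ;;
    18.04) echo "April 2023" ;;
    20.04) echo "April 2025" ;;
    22.04) echo "April 2027" ;;
    24.04) echo "April 2029" ;;
    *) echo "unknown: $1 is not an LTS release listed here" >&2; return 1 ;;
  esac
}

# Example: look up the release this server is running.
#   (. /etc/os-release && ubuntu_eol "$VERSION_ID")
```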

If you do decide to go ahead with the upgrade, then…

Determine What Software Is Being Used

Hopefully, you used a script or some sort of documented process to build the existing server. If so, you already have a good idea of what’s on it.

If you don’t, it’s time to start researching.

Look at the running processes with the “ps” command. I like using “ps -ef” because it shows every process (-e) with a full-format listing (-f).

ps -ef

Look at any non-default users in /etc/passwd. What processes are they running? You can show the processes of a given user by using the “-u” option to “ps.”

ps -fu www-data
ps -fu haproxy
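To find the non-default accounts quickly, one option is to compare the server’s /etc/passwd with a copy taken from a fresh install of the same Ubuntu release. This sketch assumes you’ve already copied that file somewhere. The name fresh-passwd below is just an example, and extra_users is a hypothetical helper, not a standard command.

```shell
# Hypothetical helper: print account names found in CURRENT but not in FRESH.
# Usage: extra_users FRESH_PASSWD CURRENT_PASSWD
extra_users() {
  awk -F: '
    FILENAME == ARGV[1] { known[$1] = 1; next }  # accounts from the fresh install
    !($1 in known)      { print $1 }             # accounts added since then
  ' "$1" "$2"
}

# Example:
#   extra_users fresh-passwd /etc/passwd
#   for u in $(extra_users fresh-passwd /etc/passwd); do ps -fu "$u"; done
```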

Determine what ports are open and what processes have those ports open:

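sudo netstat -nutlp
sudo lsof -nPi

If netstat isn’t installed (it comes from the net-tools package, which recent Ubuntu releases don’t install by default), “sudo ss -nutlp” shows the same information.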
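It can also help to save a baseline of the listening ports so you can compare the old and new servers later. Here’s a minimal sketch that reduces ss output (ss comes from the iproute2 package) to sorted “PROTO PORT” pairs. It assumes ss’s default column layout, where the fifth column is the local address. listening_ports is a hypothetical helper name.

```shell
# Hypothetical helper: read `ss -nutlp` output on stdin and print unique
# "PROTO PORT" pairs, e.g. "tcp 22". Column 1 is the protocol (Netid) and
# column 5 is the local address; the port follows its last colon.
listening_ports() {
  awk 'NR > 1 { n = split($5, addr, ":"); print $1, addr[n] }' | sort -u
}

# Example: save a baseline on the old server.
#   sudo ss -nutlp | listening_ports > ports-old.txt
```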

Look for any cron jobs being used.

sudo ls -lR /etc/cron*
sudo ls -lR /var/spool/cron
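Cron files tend to be mostly comments. This minimal sketch prints only the lines that actually do something, prefixed with the file they came from. active_cron_lines is a hypothetical helper. On systemd-based releases, also check timers with “systemctl list-timers --all”, because some scheduled jobs live there instead of in cron.

```shell
# Hypothetical helper: print non-blank, non-comment lines from each readable
# file, prefixed with the file name. Unreadable or missing files are skipped.
active_cron_lines() {
  for f in "$@"; do
    [ -r "$f" ] || continue
    awk '!/^[ \t]*#/ && NF > 0 { print FILENAME ": " $0 }' "$f"
  done
}

# Example (from a root shell, since user crontabs aren't world-readable):
#   active_cron_lines /etc/crontab /etc/cron.d/* /var/spool/cron/crontabs/*
```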

Look for other miscellaneous clues such as disk usage and sudo configurations.

df -h
sudo du -h /home | sort -h
sudo cat /etc/sudoers
sudo ls -l /etc/sudoers.d

Determine the Current Software Versions

Now that you have a list of software that is running on your server, determine what versions are being used. Here’s an example list for an Ubuntu 16.04 system:

- HAProxy 1.6.3

- Nginx 1.10

- MariaDB 10.0

One way to get the versions is to look at the packages like so:

dpkg -l haproxy nginx mariadb-server
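Package versions include Debian packaging details that upstream release notes won’t mention: an optional epoch before a colon and a packaging revision after the last hyphen. Here’s a minimal sketch that strips both so you can match a package to its upstream release notes. upstream_version is a hypothetical helper. dpkg-query’s -W and -f options are standard.

```shell
# Hypothetical helper: turn a Debian package version such as
# "1:10.1.44-0ubuntu0.18.04.1" into the upstream version "10.1.44".
upstream_version() {
  v=${1#*:}       # drop the "EPOCH:" prefix, if there is one
  echo "${v%-*}"  # drop the "-REVISION" suffix after the last hyphen, if any
}

# Example: print the upstream version of each installed package.
#   for p in haproxy nginx mariadb-server; do
#     echo "$p $(upstream_version "$(dpkg-query -W -f='${Version}' "$p")")"
#   done
```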

Determine the New Software Versions

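Now it’s time to see what version of each piece of software ships with the new distro release. Run “apt show PKG_NAME” on a system running Ubuntu 18.04 (a fresh test install works). On the old server, apt only reports the versions for the old release. Note that package names are lowercase:

apt show haproxy

To display just the version, grep it out like so (2>/dev/null hides apt’s warning that its command-line interface isn’t stable for scripts):

apt show haproxy 2>/dev/null | grep '^Version'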

Here’s our list for Ubuntu 18.04:

- HAProxy 1.8.8

- Nginx 1.14.0

- MariaDB 10.1.29

Read the Release Notes

Now, find the release notes for each version of each piece of software. In this example, we are upgrading HAProxy from 1.6.3 to 1.8.8. Many projects follow the Semantic Versioning (SemVer) guidelines, or something close to them. In short, given a version number MAJOR.MINOR.PATCH, increment the:

- MAJOR version when you make incompatible API changes,

- MINOR version when you add functionality in a backwards-compatible manner, and

- PATCH version when you make backwards-compatible bug fixes.

So we’re most concerned with major versions, somewhat concerned with minor versions, and can mostly ignore the patch version. That lets us think of this upgrade as going from 1.6 to 1.8.

Because it’s the same major version (1), we should be fine to just perform the upgrade. That’s the theory, anyway. In practice, not every project follows SemVer strictly, and a minor release can still change defaults or configuration syntax.
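Here’s a small sketch of that reasoning in shell. semver_change is a hypothetical helper that reports which part of a MAJOR.MINOR.PATCH version differs between two releases. It treats missing parts as 0 and, like the theory above, assumes the project actually follows SemVer.

```shell
# Hypothetical helper: print field N (1=major, 2=minor, 3=patch) of a
# dotted version string, or 0 if that field is missing.
version_part() {
  echo "$1" | awk -F. -v n="$2" '{ print ($n == "" ? 0 : $n) }'
}

# Hypothetical helper: report the most significant part that changed
# between two versions: major, minor, patch, or none.
semver_change() {
  for part in 1 2 3; do
    if [ "$(version_part "$1" "$part")" != "$(version_part "$2" "$part")" ]; then
      case $part in
        1) echo major ;;
        2) echo minor ;;
        3) echo patch ;;
      esac
      return 0
    fi
  done
  echo none
}

# Example: semver_change 1.6.3 1.8.8   # HAProxy in this post: minor
```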

In this case, read the release notes for HAProxy versions 1.7 and 1.8. Look for any signs of backward compatibility issues such as configuration syntax changes. Also look for new default values and then consider how those new default values could affect the environment.
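To build the reading list for a jump like this, here’s a minimal sketch. minor_releases_between is a hypothetical helper that prints every MAJOR.MINOR release after the current one, up to and including the target. It assumes both versions share the same major version and refuses to guess otherwise.

```shell
# Hypothetical helper: list the MAJOR.MINOR releases whose release notes you
# should read when going from version $1 to version $2 (same major version).
minor_releases_between() {
  major=${1%%.*}
  if [ "$major" != "${2%%.*}" ]; then
    echo "major version changed: read every release's notes" >&2
    return 1
  fi
  from=$(echo "$1" | awk -F. '{ print $2 + 0 }')
  to=$(echo "$2" | awk -F. '{ print $2 + 0 }')
  minor=$((from + 1))
  while [ "$minor" -le "$to" ]; do
    echo "$major.$minor"
    minor=$((minor + 1))
  done
}

# Example: minor_releases_between 1.6.3 1.8.8   # prints 1.7 and 1.8
```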

Repeat this process for the other major pieces of software. In this example that would be going from Nginx 1.10 to 1.14 and MariaDB 10.0 to 10.1.

Make Changes Based on the Release Notes

Based on the information from the release notes, make any required or desired adjustments to the configuration files.

If you have your configuration files stored in version control, make your changes there. If your build scripts create or modify configuration files, make your changes in those scripts. If you aren’t doing either one of those, DO IT FOR THIS DEPLOYMENT/UPGRADE. 😉 Seriously, at least make a copy of the configuration files and make your changes to the copies. That way you can push them to your new server when it’s time to test.
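If you go the copy route, one way to stay organized is to copy the files into a working directory that mirrors their original paths, then track that directory with Git. This minimal sketch assumes GNU cp (for --parents), which Ubuntu’s coreutils provides. stash_configs and the upgrade-configs directory name are hypothetical.

```shell
# Hypothetical helper: copy config files/directories into DEST, keeping their
# full paths, e.g. /etc/haproxy/haproxy.cfg -> DEST/etc/haproxy/haproxy.cfg.
# Usage: stash_configs DEST PATH...
stash_configs() {
  dest=$1
  shift
  mkdir -p "$dest" || return 1
  cp -a --parents "$@" "$dest"   # -a copies recursively and preserves attributes
}

# Example (run as root if some files aren't world-readable):
#   stash_configs upgrade-configs /etc/haproxy /etc/nginx
#   cd upgrade-configs && git init && git add . && git commit -m "Configs from old server"
```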

If you’re not sure what configuration file or files a given service uses, refer to its documentation. You can read the man page or visit the website for the software.

Also, you can list the contents of its package and look for “etc”, “conf”, and “cfg”. Here’s an example from an Ubuntu 16.04 system:

dpkg -L haproxy | grep -E 'etc|cfg|conf'

The “dpkg -L” command lists the files in the package, and grep keeps only the lines that match “etc”, “cfg”, or “conf”. The “-E” option enables extended regular expressions, where the vertical bar (|) inside the quoted pattern means “or”. (The first | on the line is the shell pipe that sends dpkg’s output to grep.)

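You can also use the locate command. It may need to be installed first (the mlocate or plocate package, depending on the release), and its results are only as current as the last “sudo updatedb” run.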

locate haproxy | grep -E 'etc|cfg|conf'

In case you’re wondering, the main configuration file for HAProxy on Ubuntu is /etc/haproxy/haproxy.cfg.

Install the Software on the Upgraded Server

Now install the major pieces of software on a new server running the new release of the distro.

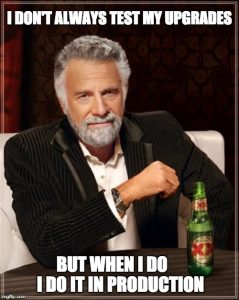

Of course, use a brand new server installation. You want to test your changes before you put them into production.

By the way, if you have a dedicated non-production (test/dev) network, use it for this test. If the service on the server you’re upgrading connects to other servers or services, it’s a good idea to isolate it from production. You don’t want to accidentally perform a production action while you’re testing. This means you may need to replicate those other servers in your non-production environment before you can fully test this upgrade.

If you have deployment scripts, you can use them to perform the installs. If you use Ansible or the like, use it against the new server. Or you can manually perform the install, making notes of all the commands you run so that you can put them in a script later on. For example, to manually install HAProxy on Ubuntu 18.04, run:

sudo apt update
sudo apt install -y haproxy

Next, put the configuration files in place.
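Before starting anything, it’s worth confirming that each configuration file parses with the new version. HAProxy can check a file with “haproxy -c -f FILE”, and Nginx can do the same with “nginx -t”. The sketch below copies a file into place, runs whatever check command you give it, and rolls back if the check fails. replace_config is a hypothetical helper. Run it from a root shell.

```shell
# Hypothetical helper: install NEW as TARGET, run a validation command, and
# roll back to the previous TARGET if validation fails.
# Usage: replace_config NEW TARGET CHECK_COMMAND [ARGS...]
replace_config() {
  new=$1
  target=$2
  shift 2
  had_old=no
  if [ -e "$target" ]; then
    cp -p "$target" "$target.bak" || return 1   # keep the current version
    had_old=yes
  fi
  cp "$new" "$target" || return 1
  if "$@"; then
    echo "check passed: $target"
  else
    echo "check failed; rolling back $target" >&2
    if [ "$had_old" = yes ]; then
      cp -p "$target.bak" "$target"
    else
      rm -f "$target"
    fi
    return 1
  fi
}

# Examples:
#   replace_config ./haproxy.cfg /etc/haproxy/haproxy.cfg haproxy -c -f /etc/haproxy/haproxy.cfg
#   replace_config ./nginx.conf /etc/nginx/nginx.conf nginx -t
```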

Start the Services

If the software that you are installing is a service, make sure it starts at boot time.

sudo systemctl enable haproxy

Start the service:

sudo systemctl start haproxy

If your existing deployment script starts the service automatically, perform a restart to make sure that any of the new configuration file changes are being used.

sudo systemctl restart haproxy

See if the service is running.

sudo systemctl status haproxy

If it failed, read the error message and make the required corrections. Perhaps there is a configuration option that worked with the previous version that isn’t valid with the new version, for example.

Import the Data

If you have services that store data, such as a database service, then import test data into the system.

If you don’t have test data, then copy over your production data to the new server.

If you are using production data, you need to be very careful at this point.

1) You don’t want to accidentally destroy or alter any production data and…

2) You don’t want your new system taking any unwanted actions based on production data.

On point #1, you don’t want to make a costly mistake such as mixing up your source and destination and overwriting (or deleting) production data. Pro tip: make sure you have good production backups that you can restore.

On point #2, you don’t want to do something like double-charge the business’s customers or send out duplicate emails. To that end, stop all the software and services that aren’t required for the import before you do it. For example, disable cron jobs and stop any in-house software running on the test system that might kick off an action.

It’s a good idea to have TEST data. If you don’t have test data, perhaps you can use this upgrade as an opportunity to create some. Take a copy of the production data and anonymize it. Change real email addresses to fake ones, etc.
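As a rough starting point for the email part, here’s a sketch that rewrites every email-like string in a SQL dump to a fake address. It maps each distinct real address to its own fake one (user1@example.com, user2@example.com, and so on). anonymize_emails is a hypothetical helper, and its regular expression is deliberately simple. It does nothing about names, phone numbers, or other personal data, so review the result before relying on it.

```shell
# Hypothetical helper: replace each distinct email-like string on stdin with
# its own fake address (user1@example.com, user2@example.com, ...).
anonymize_emails() {
  awk '{
    out = ""
    while (match($0, /[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]+/)) {
      email = substr($0, RSTART, RLENGTH)
      if (!(email in fake)) fake[email] = "user" (++count) "@example.com"
      out = out substr($0, 1, RSTART - 1) fake[email]
      $0 = substr($0, RSTART + RLENGTH)
    }
    print out $0
  }'
}

# Example ("appdb" is a placeholder database name):
#   mysqldump --single-transaction --databases appdb > prod.sql   # on production
#   anonymize_emails < prod.sql > test.sql
#   mysql < test.sql                                              # on the test server
```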

As previously mentioned, do your tests on a non-production network that cannot directly touch production.

Perform Service Checks

If you have a service availability monitoring tool (and why wouldn’t you???), then point it at the new server. Let it do its job and tell you if something isn’t working correctly. For example, you may have installed and started HAProxy, but perhaps it didn’t open the proper port because you forgot to copy over the configuration.

Whether or not you have a service availability monitoring tool, use what you know about the service to see if it’s working properly. For example, did it open up the proper port or ports? (Use the “netstat” and “lsof” commands from above). Are there any error messages you should be concerned about?
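If you saved the old server’s listening ports to a file (one “PROTO PORT” pair per line), a quick comparison shows anything the new server isn’t listening on yet. missing_ports is a hypothetical helper, and the file names in the example are placeholders.

```shell
# Hypothetical helper: print lines from OLD_LIST that don't appear in NEW_LIST,
# e.g. ports the old server listened on that the new one doesn't.
# Usage: missing_ports OLD_LIST NEW_LIST
missing_ports() {
  awk 'FILENAME == ARGV[1] { open[$0] = 1; next } !($0 in open)' "$2" "$1"
}

# Example:
#   missing_ports ports-old.txt ports-new.txt
```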

If you’re at all familiar with the service, test it. If it’s a web server, does it serve up the proper web pages? If it’s a database server, can you run queries against it?

If you’re not familiar with the service or aren’t a regular user of it, it’s time to enlist help. If you have a team that is responsible for testing, hand it over to them. Or maybe it’s time for someone in the business who uses the service to check it out and see if it works as expected.

If you don’t have a regression testing process in place, now would be a good time to create one. The goal is to make changes and know that those changes haven’t broken the service. Upgrading the OS is a major change that has the potential to break things in a major way.
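A regression testing process doesn’t have to start big. Here’s a minimal sketch of a smoke-test runner in shell: check is a hypothetical helper that runs one command per test, prints PASS or FAIL, and counts failures so a script can exit non-zero. The commented examples use real commands (systemctl is-active, curl, mysql), but the host name is a placeholder.

```shell
# Hypothetical smoke-test runner. Each check is a name plus a command; the
# command's exit status decides PASS or FAIL.
failures=0

check() {
  name=$1
  shift
  if "$@" >/dev/null 2>&1; then
    echo "PASS: $name"
  else
    echo "FAIL: $name"
    failures=$((failures + 1))
  fi
}

# Examples (new-server.example is a placeholder host name):
#   check "haproxy is running"  systemctl is-active --quiet haproxy
#   check "home page loads"     curl -fsS -o /dev/null http://new-server.example/
#   check "database answers"    mysql -e 'SELECT 1'
#   [ "$failures" -eq 0 ] || exit 1
```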

Prepare for Production

Once you’ve completed this entire process and tested your work, put all your notes into a production implementation plan. Use that plan as a checklist when you’re ready to go into production. It’s probably worth it to test your plan on another newly installed system to make sure everything goes smoothly. This is especially true when you are working on a really important system.

By the way, don’t think less of yourself for having a detailed plan and checklist. It actually shows your professionalism and commitment to doing good work.

For example, would you rather fly on a plane with a pilot who uses a checklist or one who just “wings it”? I don’t care how smart or talented that pilot is; I want them to double-check their work when it comes to my life.

Yes, It’s a Lot of Work

You might be thinking to yourself, “Wow, this is a very tedious and time-consuming process.” And you’d be right.

If you want to be a good/great Linux professional, this is exactly what it takes. Attention to detail and hard work are part of the job.

The good news is that you get compensated in proportion to your professionalism and level of responsibility.

If it were fast and easy, everyone would be doing it, right?

Hopefully, this post gave you some ideas beyond just blindly upgrading and hoping for the best. 😉

Speaking of the best… I wish you the best!

Jason

P.S. If you’re ready to level-up your Linux skills, check out the courses I created for you here.